AWS 使用Athena 搜寻ALB Access Log教程

在使用Athena查询Access Log之前需要开启先,如果还没开启可以参考这个文章:https://www.pangzai.win/aws-alb-%e5%bc%80%e5%90%afaccess-log%e6%95%99%e7%a8%8b/

参考视频:https://www.youtube.com/watch?v=zXSiPmBgQZQ

参考官方文档:https://docs.aws.amazon.com/athena/latest/ug/create-alb-access-logs-table.html

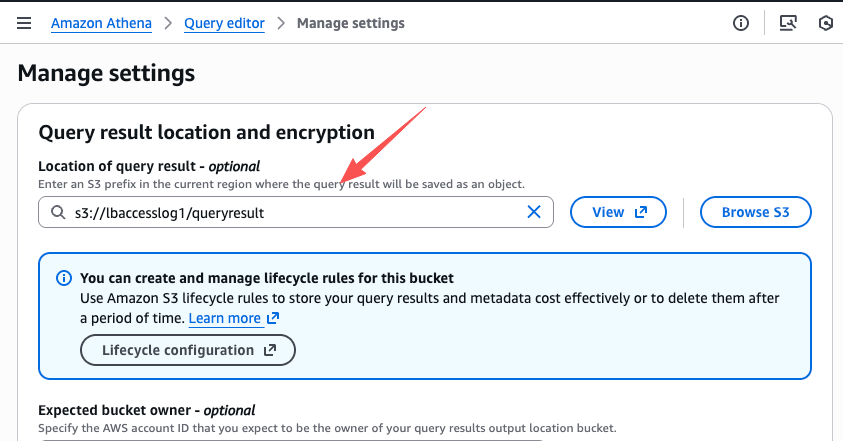

1. 头一次使用Athena的话,需要设定Athena query result的保存目录

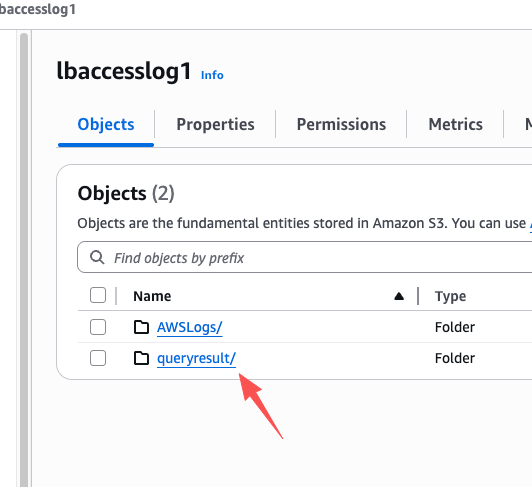

2. 我使用的是我的S3 Access Log的Bucket

你需要在同样的bucket创建你所选择的目录

3. 接着你需要在query input field 内创建ALB Access Log的table

YourBucketName 改成你的S3 Access Log bucket Name

112222333 改成你的AWS Account No

YourRegion 改成你的Access Log Region

CREATE EXTERNAL TABLE IF NOT EXISTS alb_access_logs (

type string,

time string,

elb string,

client_ip string,

client_port int,

target_ip string,

target_port int,

request_processing_time double,

target_processing_time double,

response_processing_time double,

elb_status_code int,

target_status_code string,

received_bytes bigint,

sent_bytes bigint,

request_verb string,

request_url string,

request_proto string,

user_agent string,

ssl_cipher string,

ssl_protocol string,

target_group_arn string,

trace_id string,

domain_name string,

chosen_cert_arn string,

matched_rule_priority string,

request_creation_time string,

actions_executed string,

redirect_url string,

lambda_error_reason string,

target_port_list string,

target_status_code_list string,

classification string,

classification_reason string,

conn_trace_id string

)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.RegexSerDe'

WITH SERDEPROPERTIES (

'serialization.format' = '1',

'input.regex' =

'([^ ]*) ([^ ]*) ([^ ]*) ([^ ]*):([0-9]*) ([^ ]*)[:-]([0-9]*) ([-.0-9]*) ([-.0-9]*) ([-.0-9]*) (|[-0-9]*) (-|[-0-9]*) ([-0-9]*) ([-0-9]*) \"([^ ]*) (.*) (- |[^ ]*)\" \"([^\"]*)\" ([A-Z0-9-_]+) ([A-Za-z0-9.-]*) ([^ ]*) \"([^\"]*)\" \"([^\"]*)\" \"([^\"]*)\" ([-.0-9]*) ([^ ]*) \"([^\"]*)\" \"([^\"]*)\" \"([^ ]*)\" \"([^\\s]+?)\" \"([^\\s]+)\" \"([^ ]*)\" \"([^ ]*)\" ?([^ ]*)? ?( .*)?'

)

LOCATION 's3://YourBucketName/AWSLogs/112222333/elasticloadbalancing/YourRegion/';

4. 创建好table你就能进行Access Log 的查询了,由于日志当中的时间的UTC+0的,所以我得sql是把他改成+8的马来西亚时区

select

format_datetime(

from_iso8601_timestamp(time) AT TIME ZONE 'Asia/Kuala_Lumpur',

'yyyy-MM-dd HH:mm:ss'

) AS malaysia_time,

client_ip,

request_url

from

alb_access_logs

where

client_ip in (

'113.23.77.22', '103.131.55.28',

'103.45.141.28'

)

and (

from_iso8601_timestamp(time) AT TIME ZONE 'Asia/Kuala_Lumpur'

) BETWEEN timestamp '2025-11-16 17:00:00'

AND timestamp '2025-11-16 17:02:00'

limit

10

![]()

Facebook评论