Installing Karpenter on an existing AWS EKS cluster

Reference video: https://www.youtube.com/watch?v=dS6UIovSXpA

Reference documentation: https://karpenter.sh/docs/getting-started/migrating-from-cas/#update-aws-auth-configmap

- The existing EKS cluster must have at least one node group

1. Create the IAM OIDC identity provider for the cluster. If you have already created one, skip this step

eksctl utils associate-iam-oidc-provider --cluster yourClusterName --approve

2. Prepare the variables in your terminal

KARPENTER_NAMESPACE=kube-system
CLUSTER_NAME=<your cluster name>
AWS_PARTITION="aws"
AWS_REGION="$(aws configure list | grep region | tr -s " " | cut -d" " -f3)"
OIDC_ENDPOINT="$(aws eks describe-cluster --name ${CLUSTER_NAME} \
    --query "cluster.identity.oidc.issuer" --output text)"
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query 'Account' \
    --output text)

3. Create the NodeRole

echo '{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {
                "Service": "ec2.amazonaws.com"
            },
            "Action": "sts:AssumeRole"
        }
    ]
}' > node-trust-policy.json

aws iam create-role --role-name "KarpenterNodeRole-${CLUSTER_NAME}" \
    --assume-role-policy-document file://node-trust-policy.json
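The role name above is built from ${CLUSTER_NAME}, so if that variable is empty the command is sent with the role name "KarpenterNodeRole-". Below is a minimal sketch of a guard you could run before the IAM commands. `require_vars` is a hypothetical helper of mine, not part of the AWS CLI or the Karpenter documentation.

```shell
# Hypothetical helper: return non-zero if any named variable is unset or empty.
require_vars() {
  missing=0
  for name in "$@"; do
    # Read the variable whose name is stored in $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Check the variables prepared in step 2 before creating any roles.
require_vars KARPENTER_NAMESPACE CLUSTER_NAME AWS_PARTITION AWS_REGION \
    OIDC_ENDPOINT AWS_ACCOUNT_ID \
  || echo "set the variables from step 2 before continuing"
```

The helper only reports what is missing; it does not check that the values are correct.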

4. Attach the policies to the NodeRole

aws iam attach-role-policy --role-name "KarpenterNodeRole-${CLUSTER_NAME}" \
    --policy-arn arn:${AWS_PARTITION}:iam::aws:policy/AmazonEKSWorkerNodePolicy

aws iam attach-role-policy --role-name "KarpenterNodeRole-${CLUSTER_NAME}" \
    --policy-arn arn:${AWS_PARTITION}:iam::aws:policy/AmazonEKS_CNI_Policy

aws iam attach-role-policy --role-name "KarpenterNodeRole-${CLUSTER_NAME}" \
    --policy-arn arn:${AWS_PARTITION}:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly

aws iam attach-role-policy --role-name "KarpenterNodeRole-${CLUSTER_NAME}" \
    --policy-arn arn:${AWS_PARTITION}:iam::aws:policy/AmazonSSMManagedInstanceCore

5. Create the ControllerRole and its policy

cat << EOF > controller-trust-policy.json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {
                "Federated": "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_ENDPOINT#*//}"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    "${OIDC_ENDPOINT#*//}:aud": "sts.amazonaws.com",
                    "${OIDC_ENDPOINT#*//}:sub": "system:serviceaccount:${KARPENTER_NAMESPACE}:karpenter"
                }
            }
        }
    ]
}
EOF

aws iam create-role --role-name KarpenterControllerRole-${CLUSTER_NAME} \
    --assume-role-policy-document file://controller-trust-policy.json
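The trust policy above uses the shell expansion ${OIDC_ENDPOINT#*//}, which removes the shortest prefix ending in "//", that is, the "https://" scheme of the issuer URL. Here is a small sketch of that expansion on its own. The issuer URL and the variable names EXAMPLE_ENDPOINT and EXAMPLE_PROVIDER are made up for illustration; your real value comes from step 2.

```shell
# Hypothetical issuer URL, for illustration only.
EXAMPLE_ENDPOINT="https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE0123456789"

# "#*//" strips the shortest leading match of "*//", i.e. the "https://" part.
EXAMPLE_PROVIDER="${EXAMPLE_ENDPOINT#*//}"

echo "${EXAMPLE_PROVIDER}"
# prints: oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE0123456789
```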

cat << EOF > controller-policy.json
{
    "Statement": [
        {
            "Action": [
                "ssm:GetParameter",
                "ec2:DescribeImages",
                "ec2:RunInstances",
                "ec2:DescribeSubnets",
                "ec2:DescribeSecurityGroups",
                "ec2:DescribeLaunchTemplates",
                "ec2:DescribeInstances",
                "ec2:DescribeInstanceTypes",
                "ec2:DescribeInstanceTypeOfferings",
                "ec2:DescribeAvailabilityZones",
                "ec2:DeleteLaunchTemplate",
                "ec2:CreateTags",
                "ec2:CreateLaunchTemplate",
                "ec2:CreateFleet",
                "ec2:DescribeSpotPriceHistory",
                "pricing:GetProducts"
            ],
            "Effect": "Allow",
            "Resource": "*",
            "Sid": "Karpenter"
        },
        {
            "Action": "ec2:TerminateInstances",
            "Condition": {
                "StringLike": {
                    "ec2:ResourceTag/karpenter.sh/nodepool": "*"
                }
            },
            "Effect": "Allow",
            "Resource": "*",
            "Sid": "ConditionalEC2Termination"
        },
        {
            "Effect": "Allow",
            "Action": "iam:PassRole",
            "Resource": "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}",
            "Sid": "PassNodeIAMRole"
        },
        {
            "Effect": "Allow",
            "Action": "eks:DescribeCluster",
            "Resource": "arn:${AWS_PARTITION}:eks:${AWS_REGION}:${AWS_ACCOUNT_ID}:cluster/${CLUSTER_NAME}",
            "Sid": "EKSClusterEndpointLookup"
        },
        {
            "Sid": "AllowScopedInstanceProfileCreationActions",
            "Effect": "Allow",
            "Resource": "*",
            "Action": [
                "iam:CreateInstanceProfile"
            ],
            "Condition": {
                "StringEquals": {
                    "aws:RequestTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
                    "aws:RequestTag/topology.kubernetes.io/region": "${AWS_REGION}"
                },
                "StringLike": {
                    "aws:RequestTag/karpenter.k8s.aws/ec2nodeclass": "*"
                }
            }
        },
        {
            "Sid": "AllowScopedInstanceProfileTagActions",
            "Effect": "Allow",
            "Resource": "*",
            "Action": [
                "iam:TagInstanceProfile"
            ],
            "Condition": {
                "StringEquals": {
                    "aws:ResourceTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
                    "aws:ResourceTag/topology.kubernetes.io/region": "${AWS_REGION}",
                    "aws:RequestTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
                    "aws:RequestTag/topology.kubernetes.io/region": "${AWS_REGION}"
                },
                "StringLike": {
                    "aws:ResourceTag/karpenter.k8s.aws/ec2nodeclass": "*",
                    "aws:RequestTag/karpenter.k8s.aws/ec2nodeclass": "*"
                }
            }
        },
        {
            "Sid": "AllowScopedInstanceProfileActions",
            "Effect": "Allow",
            "Resource": "*",
            "Action": [
                "iam:AddRoleToInstanceProfile",
                "iam:RemoveRoleFromInstanceProfile",
                "iam:DeleteInstanceProfile"
            ],
            "Condition": {
                "StringEquals": {
                    "aws:ResourceTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
                    "aws:ResourceTag/topology.kubernetes.io/region": "${AWS_REGION}"
                },
                "StringLike": {
                    "aws:ResourceTag/karpenter.k8s.aws/ec2nodeclass": "*"
                }
            }
        },
        {
            "Sid": "AllowInstanceProfileReadActions",
            "Effect": "Allow",
            "Resource": "*",
            "Action": "iam:GetInstanceProfile"
        }
    ],
    "Version": "2012-10-17"
}
EOF

aws iam put-role-policy --role-name KarpenterControllerRole-${CLUSTER_NAME} \
    --policy-name KarpenterControllerPolicy-${CLUSTER_NAME} \
    --policy-document file://controller-policy.json
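Both controller JSON files are written with an unquoted heredoc delimiter (<< EOF), so the shell fills in ${AWS_ACCOUNT_ID}, ${CLUSTER_NAME} and the other variables at the moment the file is written. The sketch below contrasts that with a quoted delimiter, which keeps the text literal. DEMO_CLUSTER is a hypothetical variable used only for this illustration.

```shell
DEMO_CLUSTER="my-cluster"   # hypothetical value, for illustration only

# Unquoted delimiter: ${...} is expanded while the text is written.
expanded="$(cat << EOF
role/KarpenterNodeRole-${DEMO_CLUSTER}
EOF
)"

# Quoted delimiter: the text is kept exactly as typed.
literal="$(cat << 'EOF'
role/KarpenterNodeRole-${DEMO_CLUSTER}
EOF
)"

echo "$expanded"   # prints: role/KarpenterNodeRole-my-cluster
echo "$literal"    # prints: role/KarpenterNodeRole-${DEMO_CLUSTER}
```

This is why the variables from step 2 must be set in the same terminal session before the heredocs are run: an empty variable is written into the JSON as an empty string.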

6. Tag the subnets and security groups used by the EKS cluster so that Karpenter knows which resources to use

for NODEGROUP in $(aws eks list-nodegroups --cluster-name ${CLUSTER_NAME} \
    --query 'nodegroups' --output text); do aws ec2 create-tags \
        --tags "Key=karpenter.sh/discovery,Value=${CLUSTER_NAME}" \
        --resources $(aws eks describe-nodegroup --cluster-name ${CLUSTER_NAME} \
        --nodegroup-name $NODEGROUP --query 'nodegroup.subnets' --output text )
done

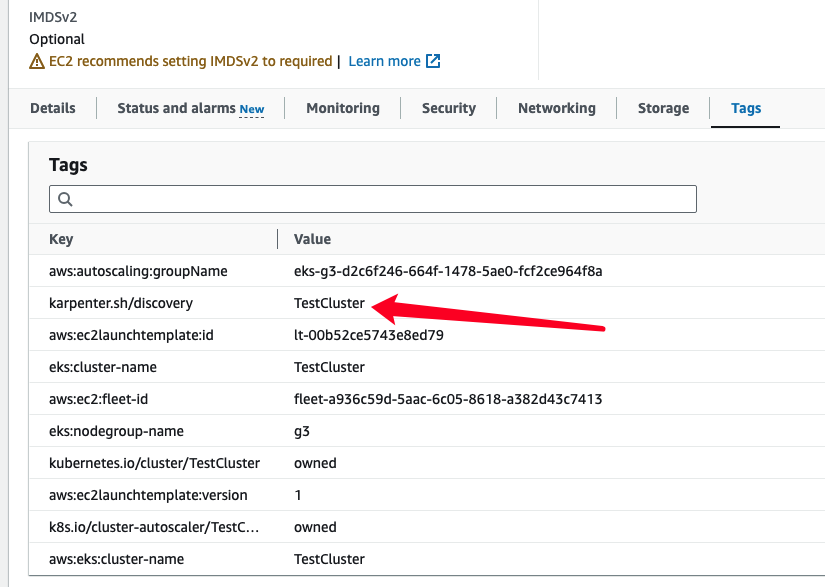

7. Add the tag to the EC2 instances in the default node group. I followed the documentation, but the commands below did not add the tag for me, which left the Karpenter deployment unable to select a node to run on, so I added the tag through the console instead

karpenter.sh/discovery : your EKS cluster name [the commands below did not work for me; they are kept purely as a record]

NODEGROUP=$(aws eks list-nodegroups --cluster-name ${CLUSTER_NAME} \
    --query 'nodegroups[0]' --output text)

LAUNCH_TEMPLATE=$(aws eks describe-nodegroup --cluster-name ${CLUSTER_NAME} \
    --nodegroup-name ${NODEGROUP} --query 'nodegroup.launchTemplate.{id:id,version:version}' \
    --output text | tr -s "\t" ",")

SECURITY_GROUPS=$(aws eks describe-cluster \
    --name ${CLUSTER_NAME} --query "cluster.resourcesVpcConfig.clusterSecurityGroupId" --output text)

aws ec2 create-tags \
    --tags "Key=karpenter.sh/discovery,Value=${CLUSTER_NAME}" \
    --resources ${SECURITY_GROUPS}
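Since tagging is the step that gave me trouble, it helps to check which resources actually carry the karpenter.sh/discovery tag afterwards. The last command above passes the cluster security group ID to create-tags, so subnets and security groups are the two resource types worth checking. The following is a rough sketch; `discovery_tagged` is a hypothetical helper name, and it assumes the AWS CLI is configured for the right account and region.

```shell
# Hypothetical helper: list the IDs of resources tagged for Karpenter discovery.
#   $1: "subnets" or "security-groups"
#   $2: cluster name (the expected tag value)
discovery_tagged() {
  case "$1" in
    subnets)         query='Subnets[].SubnetId' ;;
    security-groups) query='SecurityGroups[].GroupId' ;;
    *) echo "usage: discovery_tagged subnets|security-groups CLUSTER_NAME" >&2
       return 2 ;;
  esac
  # Filter on the tag key and value written in steps 6 and 7.
  aws ec2 "describe-$1" \
    --filters "Name=tag:karpenter.sh/discovery,Values=$2" \
    --query "$query" --output text
}

# Example calls (run them once the variables from step 2 are set):
#   discovery_tagged subnets "${CLUSTER_NAME}"
#   discovery_tagged security-groups "${CLUSTER_NAME}"
```

An empty result for either call means nothing of that type is tagged yet, and Karpenter's selectors in step 13 would have nothing to match.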

8. Add the NodeRole you created to the EKS aws-auth ConfigMap; otherwise the role has no access to the EKS cluster

eksctl create iamidentitymapping --cluster YourClusterName --region YourRegion --arn <ARN of the NodeRole you created> --group system:bootstrappers,system:nodes --username system:node:{{EC2PrivateDNSName}}

9. Deploy Karpenter. First set the version you want to use

export KARPENTER_VERSION=v0.34.1

10. Use helm to render karpenter.yaml to a local file

helm template karpenter oci://public.ecr.aws/karpenter/karpenter --version "${KARPENTER_VERSION}" --namespace "${KARPENTER_NAMESPACE}" \
    --set "settings.clusterName=${CLUSTER_NAME}" \
    --set "serviceAccount.annotations.eks\.amazonaws\.com/role-arn=arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterControllerRole-${CLUSTER_NAME}" \
    --set controller.resources.requests.cpu=1 \
    --set controller.resources.requests.memory=1Gi \
    --set controller.resources.limits.cpu=1 \
    --set controller.resources.limits.memory=1Gi > karpenter.yaml

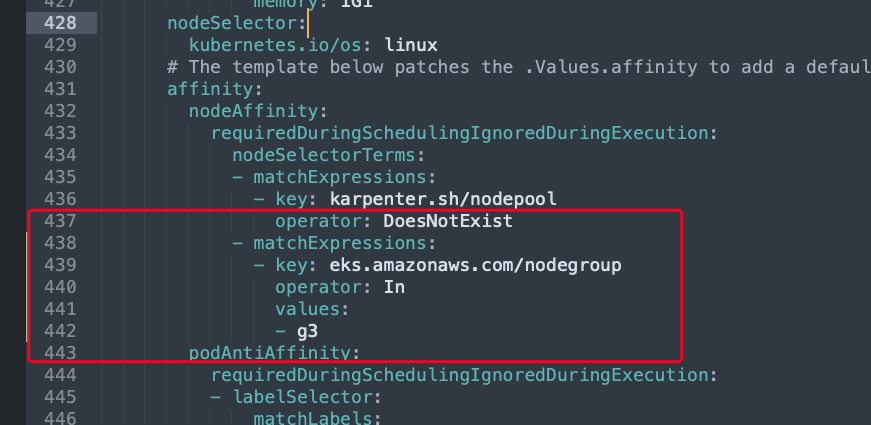
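Before editing the rendered file, it can be worth confirming that the service account annotation picked up the ControllerRole ARN, since an empty variable at render time would leave the ARN incomplete. A minimal sketch follows; `check_role_annotation` is a hypothetical helper, and the grep pattern assumes the annotation is rendered on a single line as "eks.amazonaws.com/role-arn: <arn>".

```shell
# Hypothetical helper: succeed if the manifest contains the role-arn
# annotation and that annotation mentions the expected role name.
#   $1: path to the rendered manifest
#   $2: expected role name, e.g. KarpenterControllerRole-<cluster>
check_role_annotation() {
  manifest="$1"
  expected_role="$2"
  grep -q -- "eks.amazonaws.com/role-arn: .*${expected_role}" "$manifest"
}

# Only run the check when the file from step 10 is present.
if [ -f karpenter.yaml ]; then
  check_role_annotation karpenter.yaml "KarpenterControllerRole-${CLUSTER_NAME}" \
    && echo "role annotation found" \
    || echo "role annotation missing or incomplete"
fi
```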

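11. Edit karpenter.yaml and add the following snippet to the Karpenter deployment's affinity rules, replacing the node group with the name of your EKS default node group

- matchExpressions:
  - key: eks.amazonaws.com/nodegroup
    operator: In
    values:
    - ${NODEGROUP}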

12. Run the following commands to install Karpenter

kubectl create namespace "${KARPENTER_NAMESPACE}" || true

kubectl create -f \
    https://raw.githubusercontent.com/aws/karpenter-provider-aws/${KARPENTER_VERSION}/pkg/apis/crds/karpenter.sh_nodepools.yaml

kubectl create -f \
    https://raw.githubusercontent.com/aws/karpenter-provider-aws/${KARPENTER_VERSION}/pkg/apis/crds/karpenter.k8s.aws_ec2nodeclasses.yaml

kubectl create -f \
    https://raw.githubusercontent.com/aws/karpenter-provider-aws/${KARPENTER_VERSION}/pkg/apis/crds/karpenter.sh_nodeclaims.yaml

kubectl apply -f karpenter.yaml

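13. Create your Karpenter default node group (a NodePool plus an EC2NodeClass). You can customize the spec to suit your needs

- If you want the worker nodes to be created in private subnets, add an extra tag to the EC2NodeClass subnetSelectorTerms that points at the private subnets to use (the private subnets themselves need that tag, for example environment : private)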

cat <<EOF | envsubst | kubectl apply -f -
apiVersion: karpenter.sh/v1beta1
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      requirements:
        - key: kubernetes.io/arch
          operator: In
          values: ["amd64"]
        - key: kubernetes.io/os
          operator: In
          values: ["linux"]
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["spot"]
        - key: karpenter.k8s.aws/instance-category
          operator: In
          values: ["c", "m", "r"]
        - key: karpenter.k8s.aws/instance-generation
          operator: Gt
          values: ["2"]
      nodeClassRef:
        name: default
  limits:
    cpu: 1000
  disruption:
    consolidationPolicy: WhenUnderutilized
    expireAfter: 720h # 30 * 24h = 720h
---
apiVersion: karpenter.k8s.aws/v1beta1
kind: EC2NodeClass
metadata:
  name: default
spec:
  amiFamily: AL2 # Amazon Linux 2
  role: "KarpenterNodeRole-${CLUSTER_NAME}" # replace with your cluster name
  subnetSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
  securityGroupSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}" # replace with your cluster name
EOF
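For the private subnet case mentioned in the note under step 13, here is a sketch of what the EC2NodeClass might look like with the extra tag. It assumes, as I understand Karpenter's selector semantics, that all tags inside one selector term must match, so only subnets carrying both tags are selected. The environment: private tag is the example from the note, the file name ec2nodeclass-private.yaml is my own choice, and the fallback cluster name is hypothetical.

```shell
# Hypothetical fallback so the sketch runs even outside the tutorial session.
CLUSTER_NAME="${CLUSTER_NAME:-my-cluster}"

# Write the manifest to a file first so it can be reviewed before applying.
cat << EOF > ec2nodeclass-private.yaml
apiVersion: karpenter.k8s.aws/v1beta1
kind: EC2NodeClass
metadata:
  name: default
spec:
  amiFamily: AL2
  role: "KarpenterNodeRole-${CLUSTER_NAME}"
  subnetSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}"
        environment: "private"   # example tag; must exist on the private subnets
  securityGroupSelectorTerms:
    - tags:
        karpenter.sh/discovery: "${CLUSTER_NAME}"
EOF

echo "wrote ec2nodeclass-private.yaml"
```

After reviewing the file, it could be applied with kubectl apply -f ec2nodeclass-private.yaml in place of the EC2NodeClass half of the manifest above.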

The following is the YAML configuration for ARM processors

apiVersion: karpenter.sh/v1beta1
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      requirements:
        - key: kubernetes.io/arch
          operator: In
          values: ["arm64"]
        - key: kubernetes.io/os
          operator: In
          values: ["linux"]
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand"]
        - key: karpenter.k8s.aws/instance-cpu
          operator: In
          values: ["2","4","8","16"]
      nodeClassRef:
        name: default
  limits:
    cpu: 1000
  disruption:
    consolidationPolicy: WhenUnderutilized
    expireAfter: 720h # 30 * 24h = 720h
